What is GPT (Generative Pre-trained Transformer)?

GPT, or Generative Pre-trained Transformer, is a family of state-of-the-art language models developed by OpenAI.

It is a type of machine learning model that uses deep learning techniques to generate natural language text.

GPT-3, one of the best-known models in the family, was trained on a large dataset of text drawn from sources such as books, articles, and websites.

It uses what it learned during training to generate new text that resembles the text it was trained on.
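To make "generate new text similar to the training text" concrete, here is a deliberately tiny stand-in. It is not a transformer: it is a bigram model, a much simpler technique that only counts which word follows which in the training text and then samples continuations in proportion to those counts. The corpus and the function names `train_bigram` and `generate` are hypothetical, chosen for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word in the training text."""
    words = text.split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts: dict[str, Counter], start: str, length: int, seed: int = 0) -> list[str]:
    """Extend `start` word by word, sampling each next word in proportion to its count."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length):
        followers = counts.get(output[-1])
        if not followers:  # this word was never followed by anything in training
            break
        candidates, weights = zip(*followers.items())
        output.append(rng.choices(candidates, weights=weights)[0])
    return output


# Hypothetical toy training text.
corpus = "the cat sat on the mat . the dog sat on the rug ."
model = train_bigram(corpus)
print(" ".join(generate(model, "the", 6)))
```

Every word pair in the output also occurs in the training text, which is the simplest form of "similar to what it was trained on". GPT learns far richer patterns, but it shares the basic idea of predicting the next word from what came before.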

How does GPT work?

GPT is based on a type of neural network called a transformer, which was introduced in the 2017 Google paper "Attention Is All You Need".

Transformers are particularly good at processing sequential data, such as text.
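The central operation introduced in that 2017 paper is scaled dot-product attention. Each position in the sequence produces a query vector, which is compared with the key vector of every position; the scores are turned into weights with a softmax, and the output is the weighted average of the corresponding value vectors. Here $Q$, $K$ and $V$ are the matrices of queries, keys and values, and $d_k$ is the dimension of the key vectors:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
$$

Dividing by $\sqrt{d_k}$ keeps the dot products from growing with the vector size, which would otherwise push the softmax toward extreme values.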

So how does it work?

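GPT generates text one word at a time (strictly speaking, one token, which may be a word or part of a word). To predict each new word, it takes into account the context of all the words that come before it; it does not look ahead at words that have not been written yet.

This allows GPT to generate text that is more coherent and makes more sense. Earlier language models, such as recurrent neural networks, also used context, but they struggled to keep track of it over long passages. The transformer's attention mechanism handles long-range context far better, which is a major reason for GPT's advantage.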
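In GPT, each position can attend only to itself and the words before it; this is enforced by a causal mask in self-attention. Below is a minimal pure-Python sketch of scaled dot-product attention with such a mask, assuming a single attention head and using the token vectors directly as queries, keys and values. A real GPT model applies learned projection matrices, uses many heads and layers, and works on learned token embeddings. The function names are hypothetical.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Convert raw scores into weights that are positive and sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(q: list[list[float]], k: list[list[float]],
                          v: list[list[float]]) -> list[list[float]]:
    """Scaled dot-product attention in which position i sees only positions 0..i."""
    d_k = len(k[0])
    outputs = []
    for i, query in enumerate(q):
        # Causal mask: score the query only against keys at positions 0..i.
        scores = [sum(a * b for a, b in zip(query, k[j])) / math.sqrt(d_k)
                  for j in range(i + 1)]
        weights = softmax(scores)
        # Output is the weighted average of the visible value vectors.
        outputs.append([sum(w * v[j][c] for j, w in enumerate(weights))
                        for c in range(len(v[0]))])
    return outputs


# Three toy "token" vectors, used directly as queries, keys and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
changed = tokens[:2] + [[5.0, -3.0]]  # same sequence, different last token

before = causal_self_attention(tokens, tokens, tokens)
after = causal_self_attention(changed, changed, changed)
print(before[:2] == after[:2])  # True: earlier positions never see the last token
```

Changing the last token leaves the outputs for the first two positions untouched, because they never attend to it. This is what lets GPT generate text left to right: each new word depends only on what has already been written.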

GPT can do much more

One of the most interesting things about GPT is its ability to perform a wide range of natural language processing tasks.

These include language translation, question answering, and text summarization.

All of these are possible because it has been trained on a massive dataset of text, which allows it to learn the relationships between words and phrases, including across different languages.

GPT can also be fine-tuned for specific tasks, such as answering questions about a particular subject, by training it further on a smaller dataset of text relevant to that subject.

This allows GPT to generate more accurate and relevant text for specific tasks.

Another exciting aspect of GPT is its ability to generate creative text, such as poetry and fiction.

GPT can be prompted with a seed text, such as the first line of a poem, and it will generate the next lines, completing the poem.
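Generation from a seed text is autoregressive: the model scores every possible next word, one is picked and appended to the text, and the process repeats. GPT-style models commonly expose a temperature setting that controls how adventurous each pick is. The sketch below assumes a hypothetical, hard-coded table of next-word scores (`TOY_LOGITS`) in place of a real model, which would compute the scores from the entire text so far; only the sampling loop and the temperature scaling reflect how such models are typically used.

```python
import math
import random

# Hypothetical next-word scores (logits). A real model would compute these
# from the whole text so far, not just from the last word.
TOY_LOGITS = {
    "roses": {"are": 3.0, "bloom": 1.0},
    "are": {"red": 2.0, "blue": 1.0},
    "red": {"and": 1.0},
    "blue": {"and": 1.0},
    "bloom": {"and": 1.0},
    "and": {"violets": 2.0, "roses": 0.5},
    "violets": {"are": 1.0},
}


def softmax_with_temperature(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn scores into probabilities; lower temperature sharpens, higher flattens."""
    scaled = {word: score / temperature for word, score in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - top) for word, s in scaled.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def continue_text(seed: list[str], steps: int, temperature: float,
                  rng: random.Random) -> list[str]:
    """Autoregressively append up to `steps` sampled words to the seed text."""
    words = list(seed)
    for _ in range(steps):
        logits = TOY_LOGITS.get(words[-1])
        if logits is None:  # no known continuation for this word
            break
        probs = softmax_with_temperature(logits, temperature)
        candidates, weights = zip(*probs.items())
        words.append(rng.choices(candidates, weights=weights)[0])
    return words


print(softmax_with_temperature(TOY_LOGITS["roses"], 0.5))  # "are" dominates
print(softmax_with_temperature(TOY_LOGITS["roses"], 2.0))  # flatter distribution
print(" ".join(continue_text(["roses"], 6, temperature=1.0, rng=random.Random(42))))
```

A lower temperature concentrates probability on the highest-scoring word (more predictable text), while a higher temperature flattens the distribution (more varied, "creative" text, but also more likely to drift off course).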

This opens up a lot of possibilities for creating new and original content.

Limitations of GPT

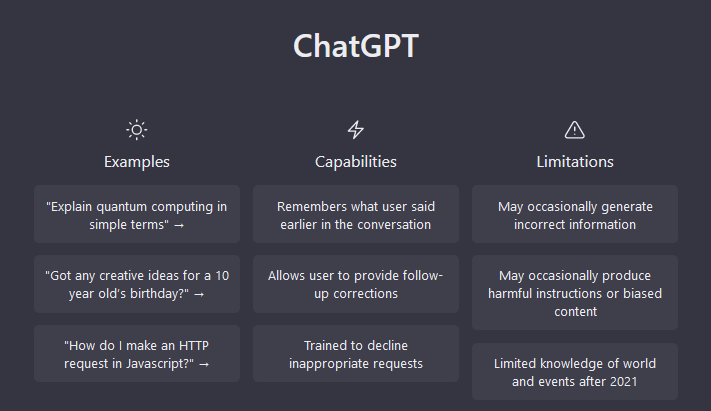

Despite its many advanced features, GPT also has limitations.

One of the main issues is its tendency to generate biased text, particularly when it is trained on a dataset that contains biases.

GPT simply models the patterns it sees in the data it was trained on. If the data contains biases, the model will reproduce those biases in its output.
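A counting example shows why. The sketch below assumes a hypothetical, deliberately skewed four-sentence training set and estimates the probability of the word that follows "said"; whatever imbalance the data contains comes straight back out as the model's prediction. GPT's learned statistics are vastly more complex than these counts, but they are still derived from the training data in the same spirit. The function name `next_word_probs` is illustrative.

```python
from collections import Counter


def next_word_probs(corpus: list[str], context: str) -> dict[str, float]:
    """Estimate P(next word | context word) by counting what follows it in the corpus."""
    followers: Counter = Counter()
    for sentence in corpus:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            if current == context:
                followers[following] += 1
    total = sum(followers.values())
    return {word: count / total for word, count in followers.items()}


# Hypothetical, deliberately skewed training data: 3 sentences use "he", 1 uses "she".
corpus = ["the engineer said he was late"] * 3 + ["the engineer said she was late"]
print(next_word_probs(corpus, "said"))  # {'he': 0.75, 'she': 0.25}
```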

Additionally, GPT cannot match the creativity and originality of human writing.

It can be seen as a tool that generates decent text, but it does not match the complexity and creativity of the human mind.

What's next for GPT?

The next step in GPT development is likely to focus on improving the model's ability to understand and generate text that is more nuanced and contextually aware.

This could include efforts to reduce bias in the model's output, as well as techniques that improve the model's ability to generate more creative and original text.

One approach is to combine GPT with reinforcement learning, notably reinforcement learning from human feedback (RLHF), in which human rankings of the model's outputs are used to train it further. The aim is text that is more contextually aware and less biased.

Additionally, researchers are fine-tuning GPT models for specific tasks, such as question answering and text summarization.

Another important area of research is the development of techniques to detect and mitigate the potential risks associated with GPT.

A particular concern is GPT's ability to generate fake or misleading information.

Therefore, OpenAI is developing techniques to detect when GPT is generating biased or factually incorrect information.

Another direction of research is making GPT models smaller and more energy-efficient, so that they can be used on a wider range of devices and in more applications.

Conclusion

GPT is a powerful machine learning model that can generate natural language text similar to the text it was trained on.

Its ability to perform a wide range of natural language processing tasks and its ability to generate creative text make it a valuable tool for many applications.

However, it also has limitations: it can generate biased text, and it does not match the creativity and originality of the human mind.

The next step in GPT development is likely to focus on improving the model's ability to generate text that is more nuanced, contextually aware, and less biased.